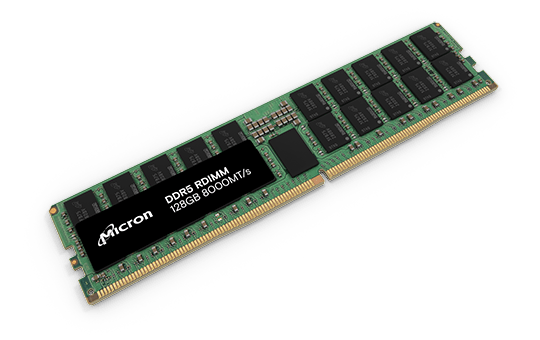

Micron Leads with First 8000 MT/s DDR5 RDIMM for AI

Micron has announced that it is the first to validate and deliver 128GB DDR5 RDIMM memory modules built on 32Gb DRAM dies on leading server platforms, setting an industry benchmark. The modules are mass-produced on Micron's latest 1-beta (1β) process node and, compared with 3DS (3D-stacked) through-silicon via (TSV) products, offer a 45% increase in density, 22% better energy efficiency, and 16% lower latency.
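The density gain comes down to simple arithmetic: a 128GB module holds 1,024Gb of data, so moving from 16Gb to 32Gb dies halves the number of dies needed, and fitting twice as many dies on one module generally calls for stacking them, as in 3DS TSV packages. The sketch below is a back-of-the-envelope illustration only. It assumes data capacity alone is counted (the ECC devices on a registered DIMM add more dies), and the helper name `data_dies_needed` is hypothetical.

```python
# Back-of-the-envelope die count for a 128GB module.
# Assumption: only data capacity is counted; ECC devices on a real RDIMM are extra.
GBIT_PER_GBYTE = 8  # 1 gigabyte = 8 gigabits


def data_dies_needed(module_gbytes: int, die_gbits: int) -> int:
    """Return how many DRAM dies of die_gbits each hold module_gbytes of data."""
    total_gbits = module_gbytes * GBIT_PER_GBYTE
    if total_gbits % die_gbits:
        raise ValueError("module capacity is not a whole number of dies")
    return total_gbits // die_gbits


if __name__ == "__main__":
    for die in (16, 32):
        # Prints the data-die count for each die density
        print(f"{die}Gb dies: {data_dies_needed(128, die)} data dies for 128GB")
```

Running it prints 64 data dies for 16Gb parts and 32 for 32Gb parts.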

Image Source: Micron

Praveen Vaidyanathan, Vice President and General Manager of the Compute Products Group at Micron, stated, “With this latest volume shipment milestone, Micron continues to lead the market in providing high-capacity RDIMMs that have been qualified on all the major CPU platforms to our customers. AI servers will now be configured with Micron’s 24GB 8-high HBM3E for GPU-attached memory and Micron’s 128GB RDIMMs for CPU-attached memory to deliver the capacity, bandwidth and power-optimized infrastructure required for memory intensive workloads.”

According to Micron, these high-performance, high-capacity memory modules are designed to meet the performance needs of mission-critical data center applications, including artificial intelligence (AI) and machine learning (ML), high-performance computing (HPC), and in-memory databases (IMDB), as well as to efficiently handle multi-threaded, high-core-count general-purpose computing workloads. The modules are expected to be widely deployed alongside server CPUs and are backed by industry partners such as AMD, HPE, Intel, and Supermicro, forming a robust ecosystem.

Micron is already shipping the 128GB DDR5 RDIMM modules and will make them available through select global distributors and resellers by June 2024.