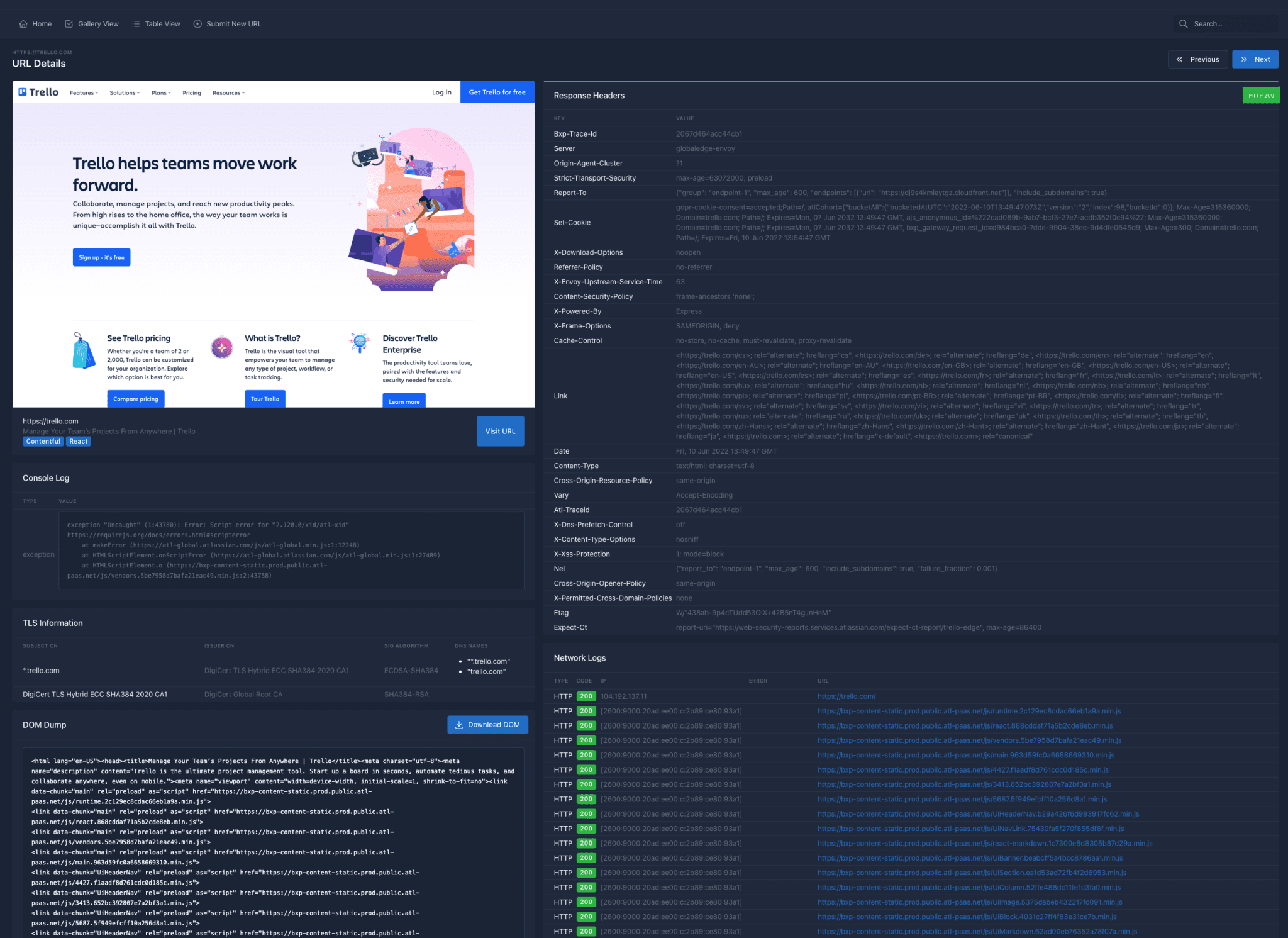

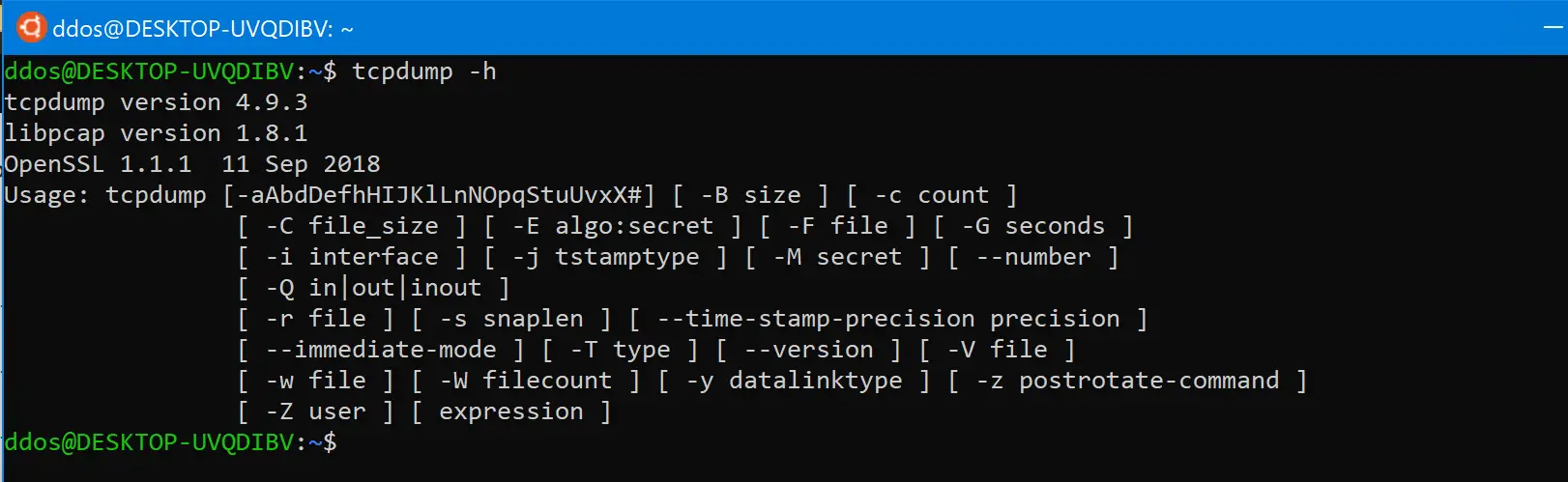

How to count HTTP requests with tcpdump

Counting HTTP requests here means measuring QPS (queries per second) and finding the ten most requested URLs. These figures usually come from the web server's access logs. On an unfamiliar server, though, we may need the top ten URLs right away to judge whether the site is under attack. tcpdump is simpler in that situation because we do not need to know where the access logs are, or whether logging is enabled at all: we capture the current HTTP packets directly and filter them to get the statistics we want. This function has been integrated into EZHTTP.

Capture packets for 10 seconds:

tcpdump -i eth0 'tcp[20:2]=0x4745 or tcp[20:2]=0x504f' -w /tmp/tcp.cap -s 512 2>&1 &

sleep 10

kill `ps aux | grep tcpdump | grep -v grep | awk '{print $2}'`

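This command listens on the network interface eth0 and captures TCP segments whose two bytes at offset 20 from the start of the TCP header (bytes 21-22) are 0x4745 ("GE") or 0x504f ("PO"), that is, packets that begin a GET or POST request. The -s 512 option keeps only the first 512 bytes of each packet, and -w writes the matching packets to /tmp/tcp.cap. Note that offset 20 is where the payload starts only when the TCP header carries no options; segments with options (timestamps, for example) have a longer header, so a filter that computes the payload offset from the header length, such as tcp[((tcp[12:1] & 0xf0) >> 2):2], may be needed to match them.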
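To see where the constants 0x4745 and 0x504f come from, the short sketch below prints the first two bytes of a method name as hex. The helper name `method_prefix_hex` is made up for this illustration and is not part of tcpdump.

```shell
# Print the first two bytes of an HTTP method name as hex digits.
# method_prefix_hex is a hypothetical helper used only for illustration.
method_prefix_hex() {
    printf '%s' "$1" | head -c 2 | od -An -tx1 | tr -d ' \n'
}

for method in GET POST; do
    echo "$method -> 0x$(method_prefix_hex "$method")"
done
```

The same helper can be used to build a filter for other methods, for example HEAD or PUT, if those should be counted as well.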

We now have a binary capture file covering the last 10 seconds. The next step is to extract the GET/POST request lines and the Host headers with the strings command.

strings /tmp/tcp.cap | grep -E "GET /|POST /|Host:" | grep --no-group-separator -B 1 "Host:" | grep --no-group-separator -A 1 -E "GET /|POST /" | awk '{url=$2; getline; host=$2; printf("%s\n", host url)}' > /tmp/url.txt
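The following sketch runs the same grep and awk stages on a small hand-written sample instead of a real capture, to show how request lines and Host headers are paired. The sample text and the function name `extract_urls` are assumptions made for this example, and `--no-group-separator` requires GNU grep.

```shell
# Hypothetical text standing in for the output of `strings /tmp/tcp.cap`.
# The "/orphan" request has no Host line after it and should be dropped.
sample='GET /index.html HTTP/1.1
Host: example.com
User-Agent: curl/8.0
GET /orphan HTTP/1.1
POST /login HTTP/1.1
Host: example.com'

# Same stages as the one-liner: keep request and Host lines, keep only
# request lines followed by a Host line, then join host and path.
extract_urls() {
    grep -E "GET /|POST /|Host:" |
    grep --no-group-separator -B 1 "Host:" |
    grep --no-group-separator -A 1 -E "GET /|POST /" |
    awk '{url=$2; getline; host=$2; printf("%s\n", host url)}'
}

printf '%s\n' "$sample" | extract_urls
```

Without `--no-group-separator`, grep would print a `--` line between non-adjacent groups of context lines, and awk would then pair the wrong lines.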

We now have every URL requested during the 10-second window, and the statistics follow easily, for example:

(( qps=$(wc -l /tmp/url.txt | cut -d' ' -f 1) / 10 ))

grep -v -i -E "\.(gif|png|jpg|jpeg|ico|js|swf|css)" /tmp/url.txt | sort | uniq -c | sort -nr | head -n 10
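As a quick check of the two statistics, the sketch below applies them to a generated URL list rather than a real capture. The file contents and the variable names `url_file` and `top` are invented for this example. Shell arithmetic is integer-only, so the QPS value is rounded down.

```shell
# Build a hypothetical URL list standing in for /tmp/url.txt (20 requests).
url_file=$(mktemp)
{
    for i in $(seq 1 12); do echo "example.com/index.html"; done
    for i in $(seq 1 5);  do echo "example.com/login"; done
    for i in $(seq 1 3);  do echo "example.com/logo.png"; done
} > "$url_file"

# QPS over the 10-second window (integer division).
(( qps = $(wc -l < "$url_file") / 10 ))
echo "QPS: $qps"

# Top URLs, with static resources filtered out.
top=$(grep -v -i -E "\.(gif|png|jpg|jpeg|ico|js|swf|css)" "$url_file" | sort | uniq -c | sort -nr | head -n 10)
echo "$top"

rm -f "$url_file"
```

Each line of the top-ten output starts with the request count followed by the URL, so the busiest URL appears first.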