Hugging Face Vulnerability Exposes AI Models to Attack

Cybersecurity firm HiddenLayer has uncovered a vulnerability in Hugging Face’s Safetensors conversion service that allows an attacker to hijack AI models submitted by users, compromising the supply chain.

According to HiddenLayer’s report, an attacker can send malicious pull requests that appear to come from the Hugging Face service to any repository on the platform, and can hijack any model submitted through the conversion service. Because the requests are disguised as coming from the conversion bot, the technique opens the door to modifying any repository on the platform.

Hugging Face is a popular collaboration platform that helps users host pre-trained machine learning models and datasets, as well as deploy and train them. Safetensors is a format developed by the company for storing tensors securely.
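To illustrate why the format is considered safer, here is a minimal sketch of the Safetensors file layout: an 8-byte little-endian header length, a JSON header describing each tensor, and then the raw tensor bytes. The helper names `build_safetensors_like` and `read_header` are hypothetical and written only for this illustration. The sketch omits details that the real `safetensors` library handles, such as validation and alignment.

```python
import json
import struct


def build_safetensors_like(tensors):
    """Serialize raw tensor bytes using the Safetensors layout (simplified).

    `tensors` maps a name to a (dtype, shape, raw_bytes) tuple.
    Hypothetical helper for illustration, not the library's API.
    """
    header = {}
    payload = b""
    for name, (dtype, shape, raw) in tensors.items():
        start = len(payload)
        payload += raw
        # Offsets are relative to the start of the data section.
        header[name] = {
            "dtype": dtype,
            "shape": shape,
            "data_offsets": [start, len(payload)],
        }
    header_bytes = json.dumps(header, separators=(",", ":")).encode("utf-8")
    # 8-byte little-endian unsigned length, then the JSON header, then the data.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + payload


def read_header(blob):
    """Parse only the JSON header; no code from the file is ever executed."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    return json.loads(blob[8:8 + header_len].decode("utf-8"))


# Two float32 values stored as one tensor named "w".
blob = build_safetensors_like({"w": ("F32", [2], struct.pack("<2f", 1.0, 2.0))})
header = read_header(blob)
```

Reading such a file involves only JSON parsing and byte slicing, so opening it does not run any code supplied by the file's author.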

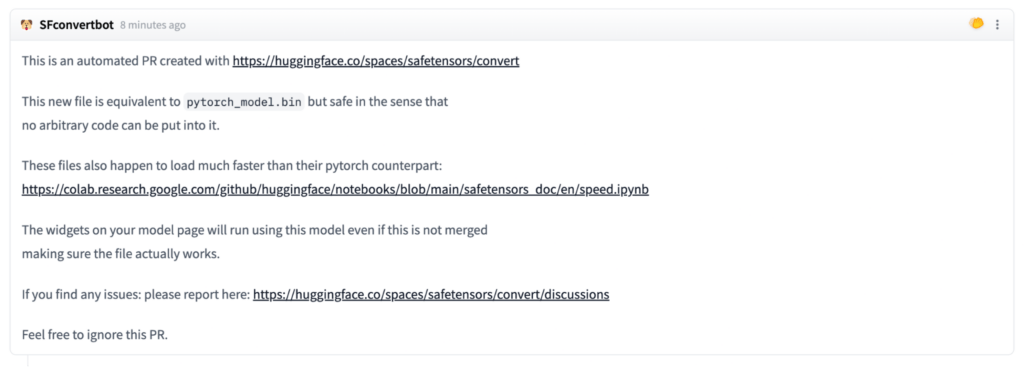

The Safetensors conversion bot “SFconvertbot” issuing a pull request to a repository

HiddenLayer’s analysis revealed that a cybercriminal could use a malicious PyTorch binary file to hijack the conversion service and compromise the system that hosts it. Furthermore, the token of the official SFconvertbot, which the bot uses to create pull requests, can be stolen and used to send malicious pull requests to any repository on the site, allowing the attacker to tamper with models and embed backdoors in them.

The researchers note that an attacker can execute arbitrary code whenever a user attempts to convert a model, and the user will not notice. If the victim tries to convert a private repository, this could lead to the theft of their Hugging Face token, access to internal models and datasets, and the potential “poisoning” of those assets.
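The code execution described above is possible because PyTorch checkpoints are conventionally serialized with Python's `pickle`, which can invoke arbitrary callables while loading. The following sketch, which is not taken from HiddenLayer's report, uses the standard library's `pickletools` to list the opcodes that import or call objects, without ever loading the data. The `Payload` class and the function `find_suspicious_opcodes` are hypothetical names, and the example assumes raw pickle bytes rather than a full checkpoint archive.

```python
import pickle
import pickletools

# Opcodes that import objects or call them during unpickling.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data):
    """Return the names of import/call opcodes found in a pickle stream.

    The stream is only disassembled, never unpickled, so nothing in it runs.
    """
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


class Payload:
    """Benign stand-in for a malicious object: it would call print() on load."""

    def __reduce__(self):
        return (print, ("code ran during unpickling",))


plain = pickle.dumps({"weights": [1.0, 2.0]})  # data only, no imports or calls
crafted = pickle.dumps(Payload())              # references and calls a function

plain_hits = find_suspicious_opcodes(plain)
crafted_hits = find_suspicious_opcodes(crafted)
```

Legitimate PyTorch checkpoints also contain some of these opcodes, since tensors are rebuilt through function calls, so a scan like this can flag a file for review but cannot decide on its own whether the file is malicious.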

The issue is compounded by the fact that any user can submit a conversion request for a public repository, which makes it possible to hijack or alter widely used models and poses a significant risk to supply chains.