Google has released a number of accessibility features for Google Maps, Google Assistant and Pixel phones

Google has recently added new accessibility features across several of its products, including Google Maps, Google Search, and Google Assistant. The updates aim to make everyday tasks easier for users of Google’s services.

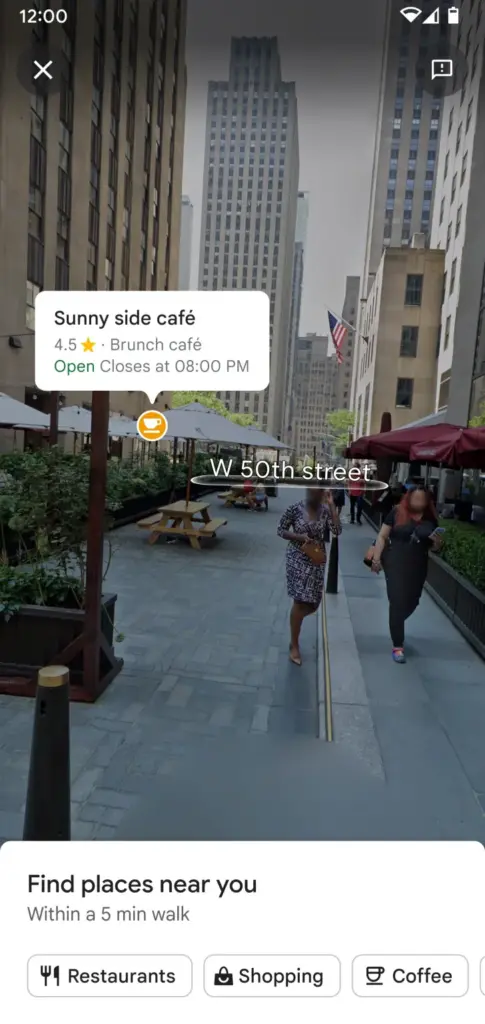

Last year, Google Maps introduced ‘Search with Live View’ in cities such as Los Angeles, New York, and San Francisco. This augmented reality feature lets users find nearby attractions, restaurants, and other points of interest through their phone’s camera. With the latest update, the feature has been renamed ‘Lens in Maps’. It helps users, especially those unfamiliar with their surroundings, spot public transit stops, restaurants, and ATMs. Google has also added screen reader support, which gives visually impaired users audio descriptions of the places around them.

Screen reader support is currently available only in the iOS version of Google Maps, with an Android rollout expected later. To use it, users tap the camera icon on the screen and hold their phone upright. The camera captures the scene ahead, and Maps identifies and describes what it sees.

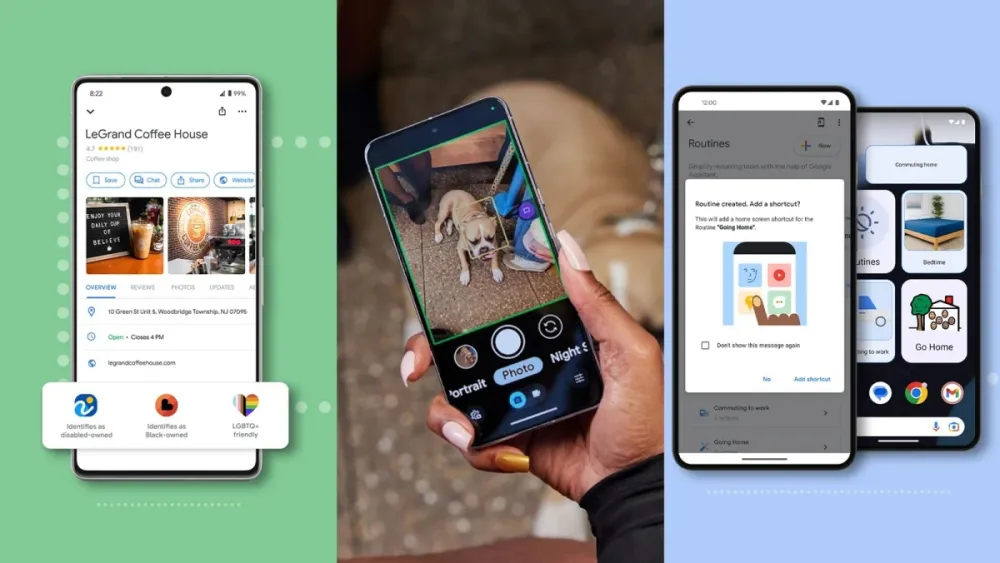

Google has also rolled out wheelchair-accessible routes in Google Maps on both Android and iOS worldwide. The feature helps users find wheelchair-friendly routes, which benefits wheelchair users as well as travelers with bulky luggage. In addition, Google Maps business listings can now show labels indicating whether a business is disabled-owned, Asian-owned, Black-owned, Latino-owned, women-owned, veteran-owned, or LGBTQ+-owned. These labels give visitors more information about the businesses they choose to visit and support.

Among other updates, Google Assistant Routines now offer more customization, including custom shortcut icons and adjustable tile sizes on the screen. Google has also improved the Chrome address bar, refining its typo detection and website suggestions. These improvements are available in both the Android and iOS versions of Chrome.

For people who are blind or have low vision, Google has also launched an app called ‘Magnifier’. Developed in collaboration with the Royal National Institute of Blind People and the American Council of the Blind, the app uses the phone’s camera as a magnifying glass. It works on the Google Pixel 5 and later models (excluding the Pixel Fold) and lets users read small print in books and newspapers or make out distant signs. Filters, along with brightness and contrast controls, make the magnified image easier to see.

Guided Frame, a previously released photography assistant feature, uses audio cues, haptic vibrations, and high-contrast visuals together with face detection to help people with low vision take well-framed selfies. A recent update extends it beyond faces, so users can also photograph pets, meals, and documents they want to archive. The update is currently available on the Pixel 8 series and is expected to come to the Pixel 6 and later models.