Stanford University releases transparency index for 10 large language models

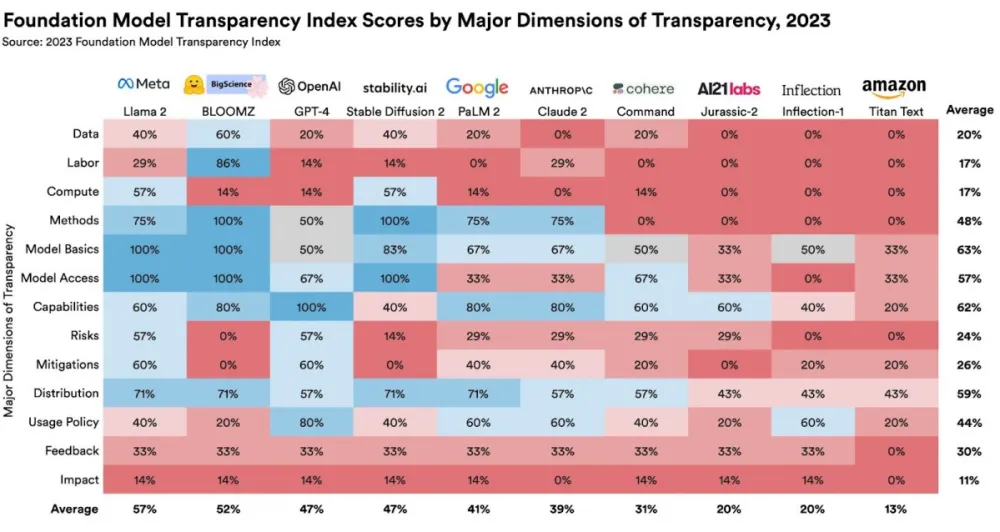

Stanford University's Institute for Human-Centered Artificial Intelligence (Stanford HAI) recently released a transparency index covering ten widely used large models. Meta's Llama 2 ranked first and Amazon's Titan Text ranked last. OpenAI's GPT-4 came third, followed by Stability AI in fourth and Google's PaLM 2 in fifth.

However, the report points out that although Llama 2 is the most transparent of the ten models, its transparency score is only 54%. Google's PaLM 2 scores 40%, and Amazon's commercial Titan Text scores just 12%.

The index is based on a range of criteria, from whether companies have disclosed how their models operate, their scale, and their architecture, to whether monitoring and remediation measures are in place. The higher a model's transparency, the more confidence users can place in it. In the view of the Center for Research on Foundation Models at Stanford HAI, which produced the index, none of the large models it evaluated can yet be fully trusted. The center advises companies and government agencies against building services on these models.

Stanford HAI defined approximately 100 criteria to evaluate the transparency of these large models. Roughly a third of them assess how the models were built, including the data used in training and the human labor involved. Another third covers the models themselves: their actual performance, reliability, risks, and possible mitigations. The remaining criteria examine the policies of the companies providing the models, and whether they offer help to people affected by the models.
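To make the arithmetic behind a percentage score concrete, here is a minimal Python sketch. It assumes each criterion is a yes/no check and that the score is simply the share of criteria satisfied; the article does not spell out the exact scoring rule, so treat that as an assumption. The `Criterion` class, the group labels, the criterion names, and the toy data are all hypothetical and are not taken from the Stanford report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    group: str       # hypothetical label for one of the three groups of criteria
    name: str        # hypothetical criterion name, for illustration only
    satisfied: bool  # assumption: each criterion is a simple yes/no check


def transparency_score(criteria):
    """Return the percentage of criteria satisfied (assumed scoring rule)."""
    if not criteria:
        raise ValueError("no criteria to score")
    met = sum(1 for c in criteria if c.satisfied)
    return 100.0 * met / len(criteria)


def score_by_group(criteria):
    """Return the same percentage, computed separately for each group."""
    groups = {}
    for c in criteria:
        groups.setdefault(c.group, []).append(c)
    return {g: transparency_score(cs) for g, cs in groups.items()}


# Toy data, invented for this example; not results from the index.
example = [
    Criterion("development", "training data described", True),
    Criterion("development", "labor practices described", False),
    Criterion("model", "risks documented", True),
    Criterion("model", "mitigations documented", False),
    Criterion("policy", "usage policy published", True),
    Criterion("policy", "help offered to affected users", False),
]

print(transparency_score(example))
print(score_by_group(example))
```

Under this assumed rule, a model that satisfies 54 of 100 criteria would score 54%, which is how a figure like the one reported for Llama 2 could arise.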