AIGoat: A Deliberately Vulnerable AI Infrastructure

As AI infrastructure becomes more widespread, attacks on it will inevitably increase. Insecure AI infrastructure can expose organizations to significant risks, including data breaches and manipulation of AI-driven decisions. These vulnerabilities often arise from misconfigurations or overlooked security flaws in AI models, applications, and deployment environments.

AI-Goat is a deliberately vulnerable AI infrastructure hosted on AWS, designed to simulate the OWASP Machine Learning Security Top 10 risks (OWASP ML Top 10). AI-Goat replicates real-world AI cloud deployment environments but introduces vulnerabilities intentionally to provide a realistic learning platform. It features multiple attack vectors and is focused on a black-box testing approach. By using AI-Goat, users can learn and practice:

- AI Security Testing/Red-teaming

- AI Infrastructure as Code

- Understanding vulnerabilities in AI application infrastructure

- Identifying risks in the services and tools that interact with AI applications

The project is structured into modules, each representing a distinct AI application with a different tech stack. AI-Goat uses infrastructure as code (IaC) through Terraform and GitHub Actions to simplify deployment.

Vulnerabilities

The project aims to cover all major vulnerabilities in the OWASP Machine Learning Security Top 10. Currently, the project includes the following vulnerabilities/risks:

- ML02:2023 Data Poisoning Attack

- ML06:2023 AI Supply Chain Attacks

- ML09:2023 Output Integrity Attack

Tech Stack

- AWS

- React

- Python 3

- Terraform

Challenges

All challenges within AI-Goat are part of a toy store application featuring different machine learning functionalities. Each challenge is designed to demonstrate specific vulnerabilities from the OWASP Machine Learning Security Top 10 risks.

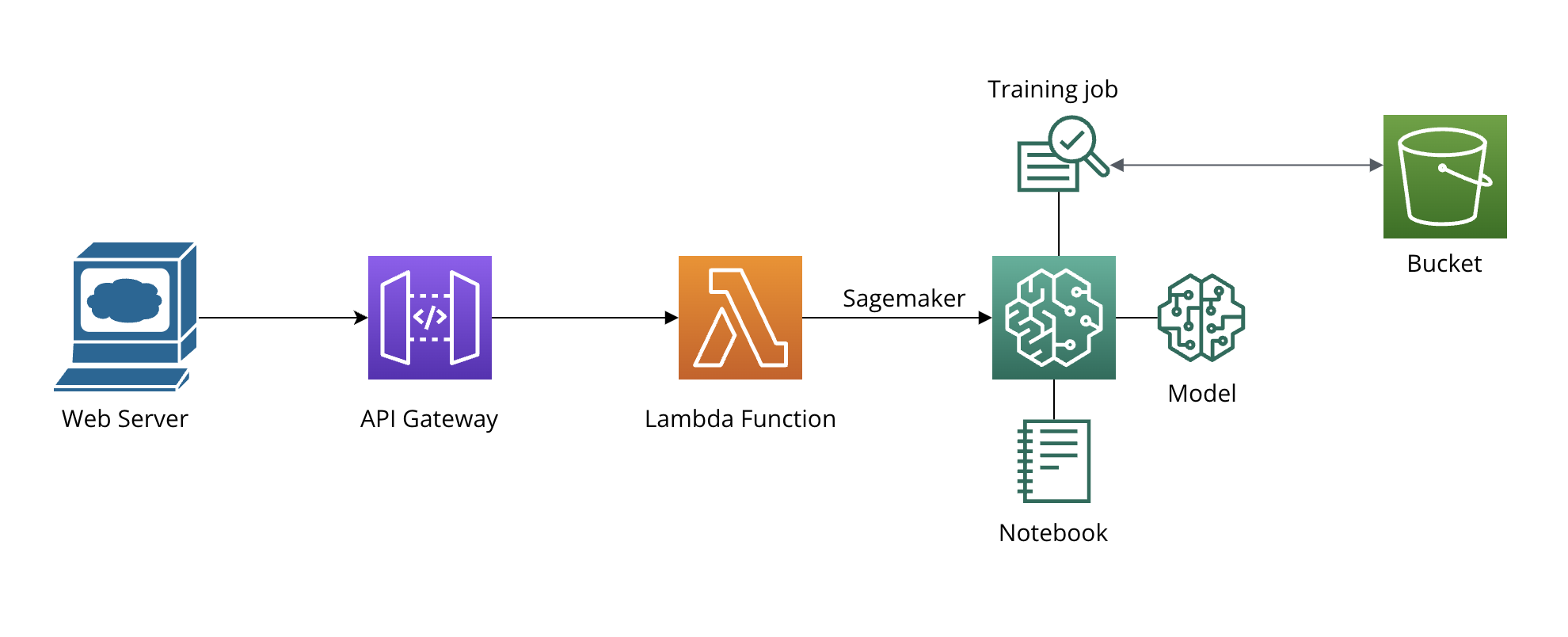

Machine Learning Modules Architecture:

Challenge 1: AI Supply Chain Attacks

This challenge features a product search page within the application. Users can upload an image, and the ML model calculates and displays the most similar products. This module highlights vulnerabilities in the AI supply chain, where attackers can compromise the pipeline due to a vulnerable package.
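To make the idea of a "vulnerable package" in the pipeline concrete, here is a minimal sketch of how pinned dependencies might be checked against a list of known-bad versions. It is a generic illustration, not part of AI-Goat and not a hint for the challenge: the package names, versions, and the `KNOWN_VULNERABLE` table are all hypothetical.

```python
# Hypothetical advisory data: package name -> versions assumed to be vulnerable.
KNOWN_VULNERABLE = {"imagelib-example": {"1.0.0", "1.0.1"}}


def parse_pins(requirements_text):
    """Parse 'name==version' lines into a dict, ignoring comments and blanks."""
    pins = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def find_vulnerable(pins, advisories=KNOWN_VULNERABLE):
    """Return the sorted names of pinned packages whose version is flagged."""
    return sorted(
        name for name, version in pins.items()
        if version in advisories.get(name, ())
    )


requirements = """
imagelib-example==1.0.1
requests-example==2.0.0  # not flagged in the hypothetical table
"""
print(find_vulnerable(parse_pins(requirements)))  # ['imagelib-example']
```

In practice a maintained vulnerability database would replace the hard-coded table; the point is only that a single flagged dependency is enough to expose the whole pipeline.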

Goal: Compromise the product search functionality using the file upload option to get the sensitive information file.

Challenge 2: Data Poisoning Attack

This challenge involves a custom product recommendations model. When a user is logged in, the cart page displays personalized product recommendations. The challenge showcases vulnerabilities in the AI model training process and dataset, allowing attackers to poison the data and manipulate recommendations.
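The effect of poisoned training data can be sketched with a toy recommender. This is not the model used in AI-Goat; the "model" below simply ranks items by mean rating, and the item names, users, and scores are made up for illustration.

```python
from collections import Counter


def train(ratings):
    """Rank items by mean rating (a deliberately naive stand-in for a model)."""
    totals, counts = Counter(), Counter()
    for _user, item, score in ratings:
        totals[item] += score
        counts[item] += 1
    return sorted(counts, key=lambda item: totals[item] / counts[item], reverse=True)


def recommend(model, k=2):
    """Return the top-k items from the trained ranking."""
    return model[:k]


# Hypothetical clean training data: (user, item, rating).
clean = [
    ("u1", "teddy_bear", 5), ("u2", "teddy_bear", 4),
    ("u1", "toy_train", 4), ("u3", "toy_train", 4),
    ("u2", "puzzle", 2), ("u3", "puzzle", 1),
]

# Rows an attacker with write access to the dataset might append.
poison = [(f"bot{i}", "puzzle", 5) for i in range(20)]

print(recommend(train(clean)))           # ['teddy_bear', 'toy_train']
print(recommend(train(clean + poison)))  # ['puzzle', 'teddy_bear']
```

Nothing in the model code changes between the two runs; only the training data does, which is what makes this class of attack hard to spot from the application side.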

user: babyshark

password: doodoo123

**Note:** When uploading resources to the bucket with the AWS CLI, you need to pass the flag `--acl bucket-owner-full-control`, since the bucket enforces it.
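As a sketch of what such an upload might look like, the helper below builds an `aws s3 cp` command line with the required ACL flag. The file name, bucket name, and object key are placeholders, not the values used in the challenge; substitute the ones you discover.

```python
import shlex


def build_upload_command(local_path, bucket, key):
    """Return the argv for `aws s3 cp` with the ACL the bucket enforces."""
    return [
        "aws", "s3", "cp", local_path, f"s3://{bucket}/{key}",
        "--acl", "bucket-owner-full-control",
    ]


# Placeholder values: replace with the real file, bucket, and key.
cmd = build_upload_command("data.csv", "example-bucket", "data.csv")
print(shlex.join(cmd))
# aws s3 cp data.csv s3://example-bucket/data.csv --acl bucket-owner-full-control
```

The printed line can be pasted into a shell, or the list can be passed to `subprocess.run(cmd, check=True)` on a machine where the AWS CLI is installed and configured.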

Challenge 3: Output Integrity Attack

For this challenge, the application features a content and spam filtering AI system that checks each comment a user attempts to add to a product page. The AI determines whether the comment is allowed or not. This module demonstrates vulnerabilities in the model output integrity, where attackers can manipulate the AI system to bypass content filtering.
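Output integrity problems arise when a model's verdict can be altered between the model and whatever acts on it. The sketch below shows one common mitigation, signing the verdict with an HMAC so that tampering is detectable. It is a generic illustration under assumed names (`SECRET_KEY`, the verdict fields), not AI-Goat's code and not the intended solution to the challenge.

```python
import hashlib
import hmac
import json

# Hypothetical secret shared by the filter service and the consumer.
SECRET_KEY = b"demo-key-not-for-production"


def sign_verdict(verdict):
    """Attach an HMAC-SHA256 tag computed over the canonical JSON verdict."""
    payload = json.dumps(verdict, sort_keys=True).encode()
    tag = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"verdict": verdict, "tag": tag}


def verify_verdict(message):
    """Recompute the tag and compare it in constant time."""
    payload = json.dumps(message["verdict"], sort_keys=True).encode()
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


signed = sign_verdict({"comment_id": 42, "allowed": False})
print(verify_verdict(signed))    # True

# An attacker flips the verdict in transit without knowing the key.
tampered = {"verdict": {**signed["verdict"], "allowed": True}, "tag": signed["tag"]}
print(verify_verdict(tampered))  # False
```

A system that trusts an unsigned verdict, or lets the client supply it, has no way to tell the second message from the first.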

Goal: Bypass the content filtering AI system to post on the Orca Doll product page the forbidden comment: