Automation of IT infrastructure management through artificial intelligence, as revealed in a recent study by RSAC Labs and George Mason University, may carry substantial risks. The researchers found that AIOps solutions—systems leveraging models akin to LLMs to analyze telemetry such as logs, performance metrics, traces, and alerts—are susceptible to data poisoning attacks. Such tools are already in use, for instance in Cisco products, enabling administrators to query infrastructure status or automatically initiate troubleshooting procedures. Yet, it is precisely this automation and implicit trust in input data that render these systems vulnerable.

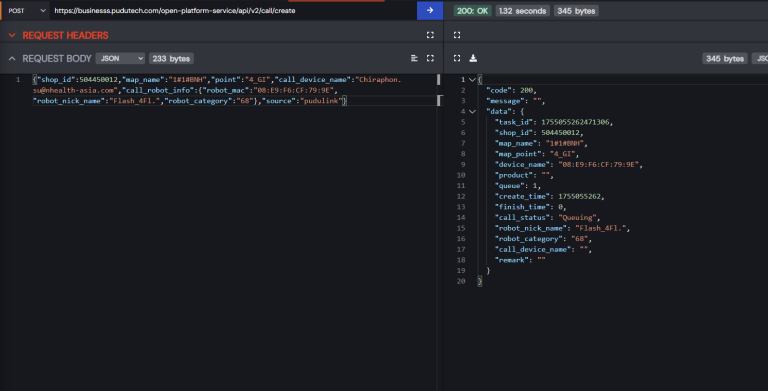

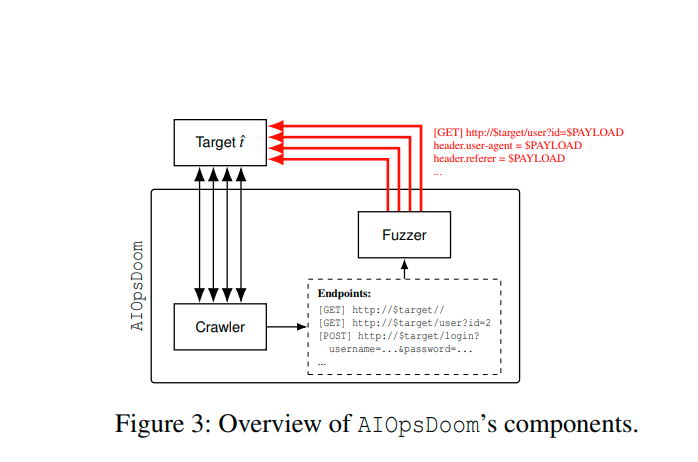

The study demonstrated that malicious actors could inject falsified telemetry records into the system, prompting the AI to take harmful actions, including installing compromised software packages. In essence, the “garbage in—harm out” principle applies: manipulated data is accepted as legitimate by the model, which then executes flawed—and potentially dangerous—remediation steps. To generate such records, an attacker could employ fuzzing to probe application endpoints that produce telemetry during events such as user logins, item additions to a shopping cart, or service error occurrences.

In one experiment on the test application SocialNet, the AI received a fabricated error log containing a “recommendation” to add the repository ppa:ngx/latest and update Nginx. The agent unquestioningly treated this as an instruction and proceeded to install the malicious package. Trials conducted on both SocialNet and HotelReservation showed the attack to be effective in 89.2% of cases.

Particular focus was given to testing OpenAI’s GPT-4o and GPT-4.1 models in similar scenarios. They succumbed in 97% and 82% of cases respectively, though the newer version displayed a greater ability to detect inconsistencies and reject harmful requests. The authors stressed that these experiments did not involve compromising live production systems, but rather simulated environments to assess vulnerabilities.

As a mitigation, the researchers proposed AIOpsShield, a mechanism for filtering potentially dangerous telemetry data. However, they acknowledged that this approach cannot ensure complete protection, particularly if an attacker can also manipulate other data sources or compromise the integrity of the supply chain. The team intends to release AIOpsShield as open-source software, giving administrators the ability to independently test and integrate the protection into their systems.