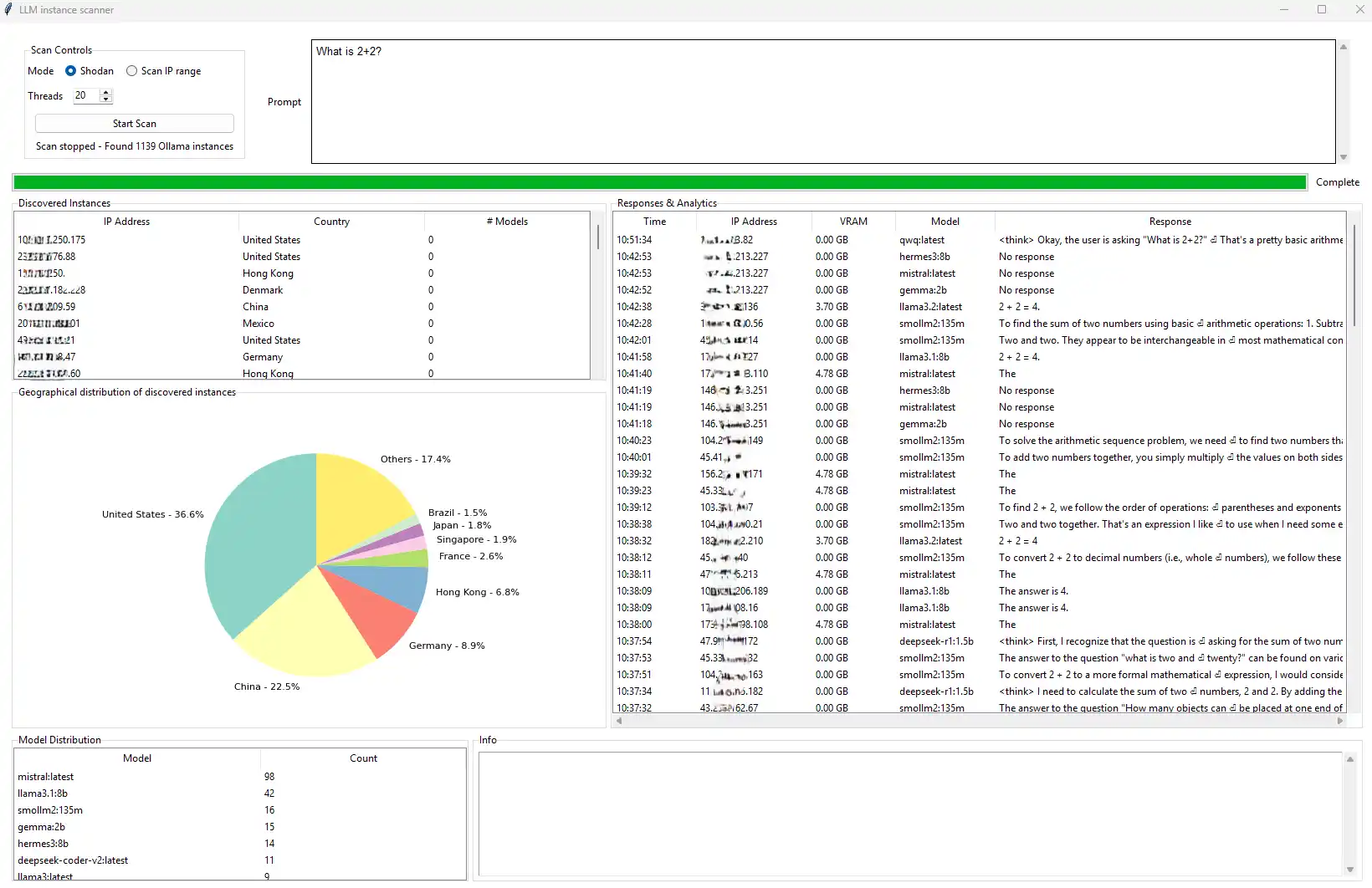

Cisco Talos researchers have uncovered more than 1,100 instances of Ollama, a framework for running large language models (LLMs) locally, exposed directly to the internet. Around 20% of them were active, serving models open to unauthorized access, which could allow adversaries to extract parameters, bypass safeguards, and inject malicious code.

Ollama's popularity has surged because it lets users deploy LLMs on local machines without relying on the cloud, which prompted Cisco to investigate how many instances are reachable from the internet. A Shodan scan turned up more than 1,000 exposed servers in just ten minutes.

A publicly accessible Ollama instance means that anyone who knows its IP address can send queries to the model or abuse its API, potentially overloading the system or inflating hosting costs. Worse still, many of these servers leak metadata that reveals details about their owners and infrastructure, giving attackers a starting point for targeted intrusions.
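To make "anyone who knows its IP address can send queries" concrete, the sketch below checks whether an Ollama server you operate answers its native model-listing endpoint without credentials. It assumes Ollama's default port (11434) and its `GET /api/tags` route, which returns JSON of the form `{"models": [{"name": ...}, ...]}` and, in a default setup, requires no authentication. The function names are hypothetical helpers written for this illustration, not part of any Ollama client library.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: Ollama's default listen port and its unauthenticated /api/tags route.
DEFAULT_PORT = 11434


def model_names(tags_payload: object) -> list[str]:
    """Extract model names from a parsed /api/tags response (hypothetical helper)."""
    if not isinstance(tags_payload, dict):
        return []
    return [m.get("name", "") for m in tags_payload.get("models", []) if isinstance(m, dict)]


def check_unauthenticated_access(host: str, port: int = DEFAULT_PORT,
                                 timeout: float = 3.0) -> list[str] | None:
    """Return installed model names if /api/tags answers without auth, else None.

    Intended for auditing servers you own or are authorized to test.
    """
    url = f"http://{host}:{port}/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # Refused connection, timeout, HTTP error (URLError/HTTPError are OSError
        # subclasses) or a non-JSON body: not openly listing models.
        return None
    return model_names(payload)
```

If this returns a list (even an empty one) when run from a machine outside your network, the API is reachable without authentication, which is exactly the situation the scan detected.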

Researchers highlighted several of the most dangerous exploitation scenarios:

- Model extraction: Through repeated queries, attackers can approximate or reconstruct a model's internal parameters, threatening intellectual property.

- Jailbreaking and restricted content generation: Models such as GPT-4, LLaMA, or Mistral can be coerced into producing malicious code, disinformation, or other prohibited output, bypassing their safeguards.

- Backdoor injection and model poisoning: Vulnerable APIs may allow adversaries to upload tampered or malicious models, or alter server configurations.

Although about 80% of the identified servers were classified as “inactive” (no models currently running), Cisco warned that they remain exploitable, for instance through the upload of new models, configuration tampering, resource-exhaustion and denial-of-service (DoS) attacks, or lateral movement across the infrastructure.

The largest share of exposed Ollama instances was in the United States (36.6%), followed by China (22.5%) and Germany (8.9%). According to Cisco, the exposure reflects widespread neglect of basic security practices in AI infrastructure deployment, including absent access controls, missing authentication, and poor network perimeter isolation. In many cases, these systems were deployed without IT oversight, bypassing audits and approval processes.
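Poor perimeter isolation often starts with the listen address. The sketch below inspects an Ollama-style bind setting and reports whether it is restricted to loopback. It assumes, per Ollama's documentation, that the server reads its address from the `OLLAMA_HOST` environment variable and defaults to `127.0.0.1:11434`; the helper names are hypothetical. A non-loopback bind does not by itself mean internet exposure (a firewall may still block it), but combined with missing authentication it is the configuration Cisco describes.

```python
from __future__ import annotations

import ipaddress
import os
from urllib.parse import urlsplit

# Assumption: Ollama's documented default bind address when OLLAMA_HOST is unset.
DEFAULT_BIND = "127.0.0.1:11434"


def bind_host(value: str | None) -> str:
    """Return the host part of an OLLAMA_HOST-style value (scheme and port optional)."""
    value = (value or DEFAULT_BIND).strip()
    if "://" not in value:
        value = "http://" + value  # lets urlsplit parse bare "host:port" and "[v6]:port"
    host = urlsplit(value).hostname
    return host if host is not None else ""


def is_loopback_only(value: str | None) -> bool:
    """True if the bind address is loopback (127.0.0.0/8, ::1 or 'localhost')."""
    host = bind_host(value)
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Empty host or a DNS name: conservatively treat as potentially exposed.
        return False


if __name__ == "__main__":
    setting = os.environ.get("OLLAMA_HOST")
    if not is_loopback_only(setting):
        print(f"Warning: Ollama may listen beyond localhost (OLLAMA_HOST={setting!r})")
```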

The problem is compounded by the widespread adoption of OpenAI-compatible APIs, which lets adversaries reuse the same tooling against multiple platforms with little adaptation. Cisco recommends developing security standards for LLM systems, automated auditing tools, and detailed guidelines for secure deployment.
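The same compatibility that helps attackers also helps defenders: one audit script can check several serving frameworks through a single route. The sketch below assumes that both Ollama (default port 11434) and vLLM (default port 8000) expose the OpenAI-compatible `GET /v1/models` endpoint, which returns `{"object": "list", "data": [{"id": ...}, ...]}`. The base URLs and function names are illustrative assumptions; point them at your own hosts.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: OpenAI-compatible /v1/models on each server's default port.
CANDIDATE_BASES = [
    "http://127.0.0.1:11434",  # Ollama
    "http://127.0.0.1:8000",   # vLLM
]


def model_ids(payload: object) -> list[str]:
    """Parse an OpenAI-style list response into model IDs."""
    if not isinstance(payload, dict):
        return []
    return [item["id"] for item in payload.get("data", [])
            if isinstance(item, dict) and "id" in item]


def audit(bases: list[str], timeout: float = 3.0) -> dict[str, list[str] | None]:
    """Map each base URL to the models it lists without credentials (None if it does not)."""
    results: dict[str, list[str] | None] = {}
    for base in bases:
        url = base.rstrip("/") + "/v1/models"
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                results[base] = model_ids(json.load(resp))
        except (OSError, ValueError):
            # Refused, timed out, 401/403 (HTTPError), or non-JSON body.
            results[base] = None
    return results
```

A single parser serves both frameworks here, which illustrates why one OpenAI-compatible client can be pointed at many different back ends with only a URL change.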

Finally, Cisco emphasized that Shodan provides only a partial view of the threat landscape and called for new scanning methods, such as adaptive server identification and active probing of frameworks like Hugging Face, Triton, and vLLM, to build a more comprehensive picture of the risks of hosting AI models.