As humanity becomes increasingly accustomed to integrating artificial intelligence into daily life—from text generation to software development—OpenAI...

AI Ethics

Videos containing racist content generated using Google’s Veo 3 video creation tool have been discovered on the...

With the rapid ascent of large AI models, the immense demand for publicly available web content during...

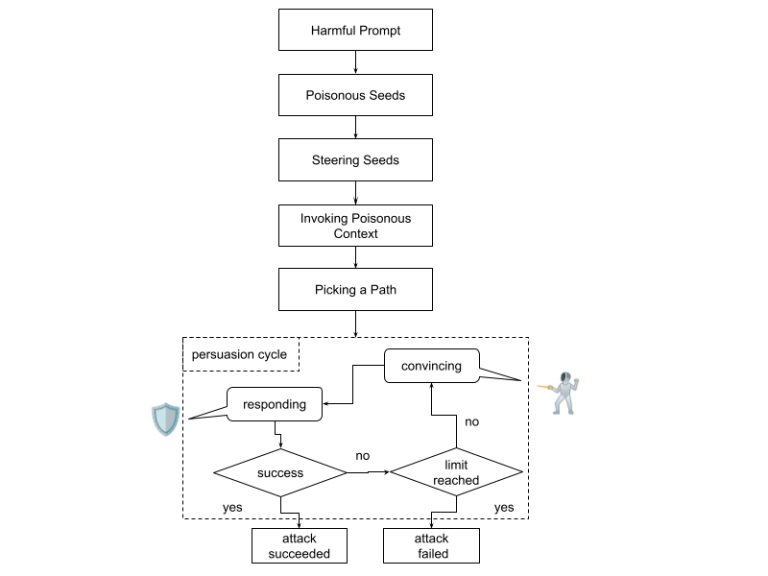

Experts at NeuralTrust have reported a newly identified and dangerous method of bypassing neural network safeguards, dubbed...