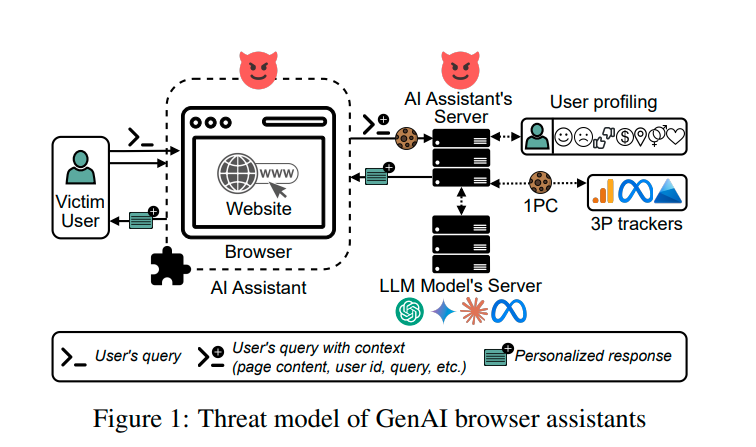

Researchers from University College London and the Mediterranea University of Reggio Calabria, Italy, have conducted the first large-scale investigation into the privacy practices of generative AI assistants for web browsers. They found that even the most popular and ostensibly “safe” extensions actively collect and transmit sensitive personal data, often without sufficient safeguards and, in many cases, without the user’s knowledge.

These tools—such as ChatGPT for Google, Merlin, Copilot (unrelated to Microsoft’s application of the same name), Monica, Sider, TinaMind, and others—embed generative artificial intelligence directly into the browser, offering answers to queries, page summarization, automated site navigation, and execution of complex workflows. Yet this integration also grants them sweeping capabilities to track activity and build detailed profiles of their users.

For the study, the researchers simulated a realistic online presence, creating the persona of a “wealthy millennial from California” and using it while engaging with these assistants for everyday activities—from information searches to online banking transactions. They found that some extensions transmitted the full content of webpages to their servers, including everything visible on the screen. In the case of Merlin, even form data—such as online banking credentials or medical information—was sent.
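One way to picture this kind of audit is to plant known values in test pages and then search the captured outbound traffic for them. The Python sketch below is illustrative only and is not the researchers' tooling: the seeded values, the `find_leaks` helper, and the set of encodings checked are all assumptions made for the example.

```python
import base64
from urllib.parse import quote, quote_plus

# Hypothetical persona values planted in test pages and forms (assumed, not from the study).
SEEDED_VALUES = {
    "bank_password": "Tr0ub4dor&3-test",
    "diagnosis": "type 2 diabetes",
}


def encodings(value: str) -> set[str]:
    """Return the plain value plus a few common wire encodings of it."""
    raw = value.encode("utf-8")
    return {
        value,                                  # sent verbatim, e.g. inside JSON
        quote(value, safe=""),                  # percent-encoded
        quote_plus(value),                      # form-style encoding (spaces become '+')
        base64.b64encode(raw).decode("ascii"),  # value base64-encoded on its own
    }


def find_leaks(request_body: str, seeded: dict[str, str]) -> list[str]:
    """List the names of seeded values that appear in a captured request body."""
    return sorted(
        name
        for name, value in seeded.items()
        if any(variant in request_body for variant in encodings(value))
    )
```

A plain substring search like this is deliberately simple. It would miss values that are compressed, encrypted, or base64-encoded as part of a larger payload, so a clean result does not prove that nothing was sent.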

Extensions like Sider and TinaMind shared not only user queries but also identifying parameters, such as IP addresses, with external analytics services, including Google Analytics. This opens the door to cross-site tracking and targeted advertising. Notably, only one assistant, Perplexity, showed no signs of personalization or data collection for profiling.
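To make the distinction between an assistant's own servers and external analytics services concrete, captured request URLs can be grouped by destination host. The following is a rough sketch under stated assumptions: `assistant.example` is a placeholder for an extension's own backend, and the analytics suffix list is a short illustrative sample, not an exhaustive tracker list or the one used in the study.

```python
from urllib.parse import urlsplit

# Assumed lists, for illustration only.
FIRST_PARTY_SUFFIXES = ("assistant.example",)  # placeholder for the extension's own backend
ANALYTICS_SUFFIXES = ("google-analytics.com", "analytics.google.com")


def host_matches(host: str, suffixes: tuple[str, ...]) -> bool:
    """True if host equals a suffix or is a subdomain of one."""
    return any(host == s or host.endswith("." + s) for s in suffixes)


def classify(url: str) -> str:
    """Label a request URL as 'analytics', 'first-party', or 'other' by its hostname."""
    host = (urlsplit(url).hostname or "").lower()
    if host_matches(host, ANALYTICS_SUFFIXES):
        return "analytics"
    if host_matches(host, FIRST_PARTY_SUFFIXES):
        return "first-party"
    return "other"
```

Matching on a whole domain label (`host == s` or a `"." + s` suffix) rather than a bare `endswith` keeps a lookalike such as `notgoogle-analytics.com` from being counted as an analytics endpoint.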

Some tools, including ChatGPT for Google, Copilot, Monica, and Sider, analyzed user behavior to infer age, gender, income level, and interests, applying these insights to tailor responses even in subsequent sessions. The problem is compounded because most of these assistants do not run local models in the browser; they rely on remote APIs, which may be called automatically without any explicit user action.

Requests to these services often involve transmitting the entire HTML structure of the page (DOM) and, at times, form data. Tests further revealed that certain extensions, including Merlin and Sider, continued to log user activity even in private browsing mode—undermining the very purpose of that feature.

The study’s authors stress that these capabilities grant unprecedented access to areas of online activity traditionally considered private, necessitating urgent regulatory oversight. While the testing was conducted in the United States and did not assess compliance with UK or EU laws such as the GDPR, the researchers believe that most of the practices identified would likely violate the more stringent standards of those jurisdictions.