Artificial intelligence systems have often been criticized for producing convoluted vulnerability reports and overwhelming open-source developers with irrelevant complaints. Yet researchers from Nanjing University and the University of Sydney have presented a striking counterexample: Agent A2, a system designed to discover and validate vulnerabilities in Android applications by emulating the workflow of a human bug hunter. This project builds upon their earlier work, A1, which specialized in exploiting flaws in smart contracts.

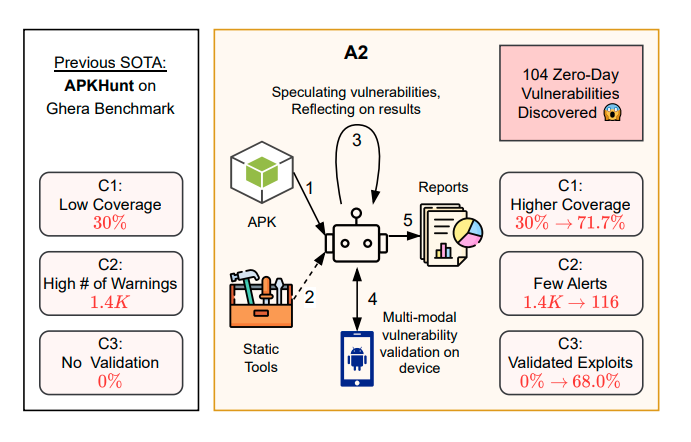

According to the authors, A2 achieved a 78.3% coverage rate on the Ghera benchmark suite, significantly outperforming the static analyzer APKHunt, which managed only 30%. When tested on 169 real APKs, it uncovered 104 zero-day vulnerabilities, with 57 confirmed through automatically generated working exploits. Among these was a medium-severity flaw in an application with over 10 million installs—an intent-redirection bug that could allow malicious software to hijack execution flow.

The defining innovation of A2 is its validation module, absent in its predecessor. Whereas A1 relied on a rigid scheme that merely assessed whether an exploit could yield profit, A2 validates vulnerabilities step by step, breaking the process into discrete tasks. One example involved an app where an AES key was stored in plaintext. A2 first detected the key in strings.xml, then used it to generate a forged password reset token, and finally confirmed that the token successfully bypassed authentication. Each stage was accompanied by its own automated check: matching values, confirming app activity, and verifying that the correct address was rendered on the screen.
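The staged approach described above can be illustrated with a minimal Python sketch. This is not A2's code: the `Step` class, the `run_validation` helper, and the fake app are all hypothetical, and HMAC-SHA256 stands in for the app's AES-based token scheme only because the Python standard library has no AES. The point is the structure, in which every action is paired with an independent check and the run stops at the first check that fails.

```python
import hashlib
import hmac
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

Context = Dict[str, str]

@dataclass
class Step:
    name: str
    action: Callable[[Context], None]  # performs the step, stores evidence in ctx
    check: Callable[[Context], bool]   # independent verification of that step

def run_validation(steps: List[Step], ctx: Context) -> List[str]:
    """Run steps in order and return the names of those whose checks passed."""
    passed: List[str] = []
    for step in steps:
        step.action(ctx)
        if not step.check(ctx):
            break  # a failed check ends the run; later steps are not attempted
        passed.append(step.name)
    return passed

# Hypothetical stand-ins for the target app (assumptions, not real data).
FAKE_STRINGS_XML = '<resources><string name="aes_key">s3cr3t-key</string></resources>'

def fake_app_accepts(token: str, user: str) -> bool:
    """Pretend reset endpoint: accepts tokens derived from the hardcoded key."""
    expected = hmac.new(b"s3cr3t-key", user.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(token, expected)

def find_key(ctx: Context) -> None:
    match = re.search(r'name="aes_key">([^<]+)<', FAKE_STRINGS_XML)
    ctx["key"] = match.group(1) if match else ""

def forge_token(ctx: Context) -> None:
    ctx["token"] = hmac.new(
        ctx["key"].encode(), ctx["user"].encode(), hashlib.sha256
    ).hexdigest()

def try_reset(ctx: Context) -> None:
    ctx["bypassed"] = str(fake_app_accepts(ctx["token"], ctx["user"]))

steps = [
    Step("detect_key", find_key, lambda c: bool(c.get("key"))),
    Step("forge_token", forge_token, lambda c: len(c.get("token", "")) == 64),
    Step("bypass_auth", try_reset, lambda c: c.get("bypassed") == "True"),
]

result = run_validation(steps, {"user": "victim@example.com"})
print(result)
```

Because each check is separate from its action, a finding counts as validated only when the full chain passes, which is the property the authors credit for reducing noise.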

To function, A2 orchestrates several large language models (OpenAI o3, Gemini 2.5 Pro, Gemini 2.5 Flash, and the open-weight gpt-oss-120b), each assigned a distinct role: a planner devises the attack strategy, an executor performs the actions, and a validator confirms the outcome. This architecture, the authors argue, mirrors human methodology, reducing noise and increasing the share of validated findings. Traditional analyzers often generate thousands of low-value alerts with few real threats, whereas A2 aims to back its findings with working exploits.
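As a rough illustration of this division of labor, the sketch below wires three roles together in Python. It is an assumption-laden toy rather than the authors' implementation: the `Finding` class, `run_pipeline`, and the stub functions are invented for this example, and each role is a plain callable where a real system would wrap a call to a language model.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Finding:
    description: str
    validated: bool = False

# Hypothetical role signatures; in a real system each would wrap an LLM call.
Planner = Callable[[str], List[str]]    # finding description -> ordered tasks
Executor = Callable[[str], str]         # task -> observed result
Validator = Callable[[str, str], bool]  # (task, result) -> did the task succeed?

def run_pipeline(finding: Finding, plan: Planner,
                 execute: Executor, validate: Validator) -> Finding:
    """Mark a finding as validated only if every planned task is confirmed."""
    tasks = plan(finding.description)
    if not tasks:
        return finding  # nothing to run, so nothing was demonstrated
    for task in tasks:
        result = execute(task)
        if not validate(task, result):
            return finding  # stays unvalidated
    finding.validated = True
    return finding

# Stub roles, for demonstration only.
def stub_plan(description: str) -> List[str]:
    return [f"locate: {description}", f"exploit: {description}"]

def stub_execute(task: str) -> str:
    return "ok" if task.startswith(("locate", "exploit")) else "error"

def stub_validate(task: str, result: str) -> bool:
    return result == "ok"

confirmed = run_pipeline(
    Finding("hardcoded key in strings.xml"), stub_plan, stub_execute, stub_validate
)
print(confirmed)
```

Keeping the validator separate from the executor means the component that performs an action is never the one that judges whether it worked.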

The team also analyzed cost efficiency. Detection alone ranges from $0.0004 to $0.03 per application, depending on the models used, while a full detection-and-validation cycle averages $1.77. Exclusively relying on Gemini 2.5 Pro raises the cost to $8.94 per bug. By comparison, researchers at the University of Illinois showed in 2024 that GPT-4 could generate an exploit from a vulnerability description for $8.80. Thus, the expense of identifying and validating a flaw in a mobile app with A2 is a small fraction of the reward for a single medium-severity vulnerability in bug bounty programs, which typically runs into the hundreds or thousands of dollars.
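To put these figures in proportion, the short calculation below compares them with a bounty payout. The cost constants are the ones quoted above; the $500 bounty is an assumption chosen purely for illustration, since the article gives only a range of hundreds to thousands of dollars.

```python
# Figures quoted in the article (US dollars).
DETECTION_COST_RANGE = (0.0004, 0.03)  # detection only, per application
FULL_CYCLE_AVG = 1.77                  # detection plus validation, average
GEMINI_PRO_ONLY = 8.94                 # per bug when using only Gemini 2.5 Pro
APPS_TESTED = 169

# Assumption for illustration only: a single $500 bounty.
ASSUMED_BOUNTY = 500.0

def reward_to_cost(reward: float, cost: float) -> float:
    """How many times the reward exceeds the cost of finding the bug."""
    return reward / cost

# Detection-only spend if every tested APK were scanned once.
detection_total = tuple(round(c * APPS_TESTED, 4) for c in DETECTION_COST_RANGE)

mixed_ratio = reward_to_cost(ASSUMED_BOUNTY, FULL_CYCLE_AVG)
pro_ratio = reward_to_cost(ASSUMED_BOUNTY, GEMINI_PRO_ONLY)

print(f"Detection over {APPS_TESTED} apps: ${detection_total[0]} to ${detection_total[1]}")
print(f"Bounty / full-cycle cost: {mixed_ratio:.0f}x")
print(f"Bounty / Gemini-only cost: {pro_ratio:.0f}x")
```

Under that assumed payout, the reward exceeds the average full-cycle cost by a factor of roughly 280, and the Gemini-only cost by a factor of roughly 56.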

The researchers emphasize that A2 already surpasses traditional static analyzers for Android apps, while A1 is nearing top-tier performance in smart contract testing. They believe this approach could greatly accelerate the work of both defenders and attackers, since instead of painstakingly developing custom tools, one can simply call APIs of pretrained models. The concern, however, is that bounty hunters might exploit A2 for rapid profit, while many flaws lie outside the scope of existing reward programs—leaving attackers free to weaponize them.

The authors conclude that this field is only beginning to evolve, and a surge in both defensive and offensive applications is likely imminent. Industry experts note that systems like A2 shift vulnerability discovery away from endless alarms toward confirmed findings, reducing false positives and enabling focus on genuine risks. For now, the source code is restricted to vetted research partners, in an attempt to balance open science with responsible disclosure.