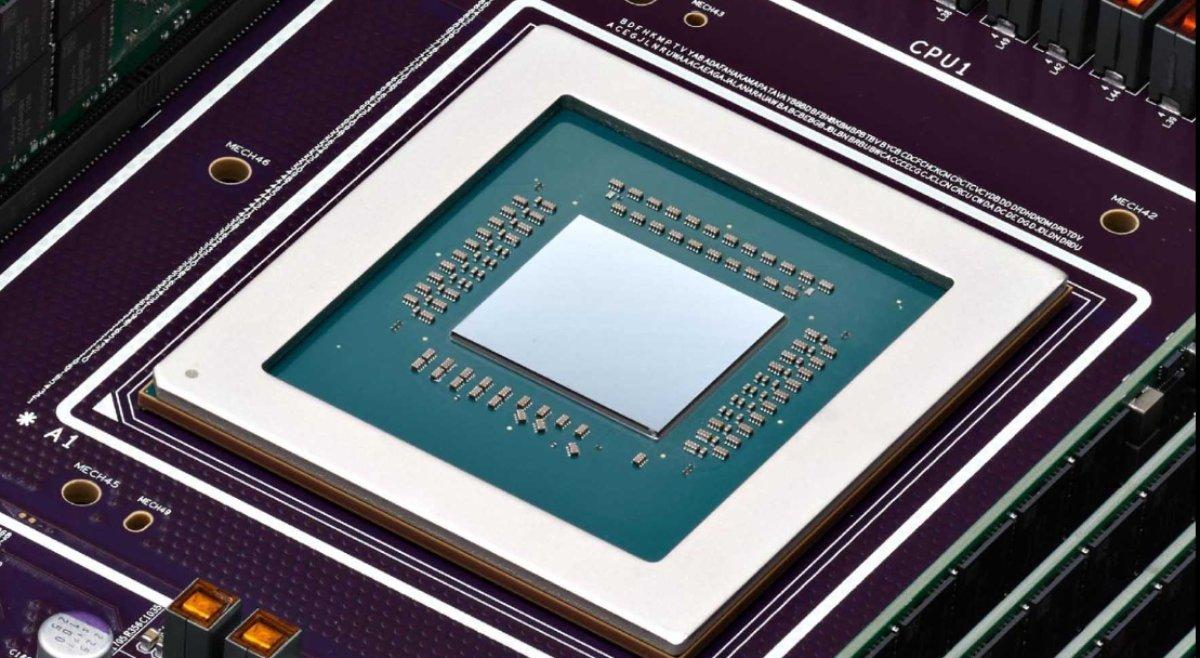

OpenAI has begun using Google’s TPU chips, rather than relying solely on Nvidia GPUs, to cut operating costs and reduce its dependence on a single supplier. According to Reuters, this is the first time the company has rented computing power from Google Cloud; until now it has relied on Microsoft and Oracle infrastructure built on Nvidia graphics accelerators.

Until recently, everything OpenAI ran, from model training to serving ChatGPT, was built on Nvidia GPUs. The company is now adding a major new partner to hedge against supply constraints and the market dominance of a single vendor. Google has not yet made its most advanced TPUs available to outside customers, but the models on offer are capable enough to handle inference, and to do so more cheaply than Nvidia’s hardware. Cutting the cost of inference, the phase in which a trained model responds to user requests, is the main motivation for the shift.

The partnership matters for Google as well. The company is expanding external access to its TPU chips, which were long reserved mainly for internal use, and OpenAI joins prominent adopters such as Apple, Anthropic, and Safe Superintelligence. Google is strengthening its position in cloud computing and increasingly positioning itself as a serious rival to Nvidia, offering cheaper alternatives and seeking to reshape competition in AI chips.

The agreement between OpenAI and Google reflects a broader trend: the artificial intelligence industry is becoming less centralized, with key players prioritizing flexibility and diversification. The shift is likely to influence hardware pricing, speed up innovation, and alter the balance of power within the AI ecosystem.